As technology advances, new issues and concerns are surfacing around the world.

One of these issues is Deepfake content. Deepfake is a technology based on artificial intelligence that replaces a person in an existing image or video with someone else's likeness. It is most often used to swap one person's face for another's.

Recently, Deepfake content has been created that places Korean idol members in pornographic material. Because the technique has become so advanced, concern over such content has been growing.

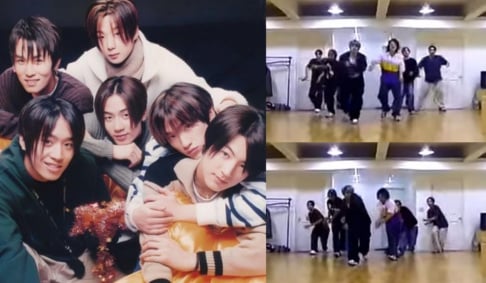

One netizen demonstrated how frightening Deepfake content could be if used maliciously by posting a few captures from a Deepfake video in which TWICE members' faces were replaced with other girl group members' faces.

Nayeon --> Jennie

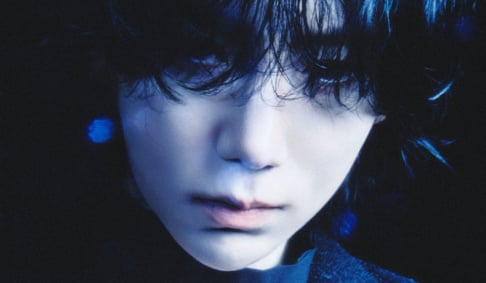

Jungyeon --> Jisoo

Momo --> Lisa

Jungyeon --> Jisoo

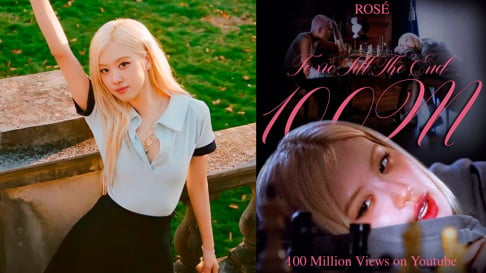

Sana --> Rose

Momo --> Lisa

Mina --> Seulgi

Tzuyu --> Joy

The photos showed TWICE members' faces replaced with the faces of BLACKPINK and Red Velvet members. The netizen who created the post in the online community explained, "These are only captures from the video, but it looks real in the video. This would be a huge issue if even ordinary people can create Deepfake content and use it with ill intent. It would be a bigger problem if the individuals maliciously using Deepfake are not punished properly by the law. Sooner or later, people will be able to commit crimes using other people's faces."

Other netizens joined the conversation, sharing their concerns about how serious the problem would be if Deepfake material were used maliciously. Netizens commented, "I saw a Deepfake video of a popular girl group member on a pornographic site. Of course, I didn't watch it, but the thumbnail photo looked so real I was shocked," "These photos look so natural it's so scary," "I heard that 98% of Deepfake content is used in malicious ways. Also, 25% of these Deepfake pornographic videos use Korean idol members. This is so bad. The people creating such content need to be punished," "Omg, I got goosebumps because these photos look so real," and "To be honest, the bigger issue is for ordinary people. When we see Deepfake pornography of celebrities, most people would think it's fake, but if an acquaintance's face is used in a Deepfake video, most people would not think it's fake."


Deepfake really scares me. It isn’t just an issue with idols or women in general. It may be used in politics as well and wreak havoc.

I wish unauthorized usage of it would be banned.
